After having deployed a website to Windows Azure using the Azure accelerator for Umbraco, we are (occasionally) experiencing SQL Azure connection errors & timeouts from within the Umbraco backoffice. We have yet to discover the source of evil, but in the meanwhile I'm wondering if any of you ever experienced/resolved these kind of connection timeouts before? Any tips/tricks on how to trace/profile Umbraco on SQL Azure are welcome as well.

Some of the error messages we find in the Umbraco logs:

umbraco.DataLayer.SqlHelperException: Umbraco Exception (DataLayer):

SQL helper exception in ExecuteReader ---> System.Data.SqlClient.SqlException: A connection was successfully established with the server,

but then an error occurred during the pre-login handshake. (provider: TCP Provider, error: 0 - The specified network name is no longer available.)

umbraco.DataLayer.SqlHelperException: Umbraco Exception (DataLayer):

SQL helper exception in ExecuteReader ---> System.Data.SqlClient.SqlException: A transport-level error has occurred when receiving results from the server.

(provider: TCP Provider, error: 0 - The semaphore timeout period has expired.)

umbraco.DataLayer.SqlHelperException: Umbraco Exception (DataLayer):

SQL helper exception in ExecuteReader ---> System.Data.SqlClient.SqlException: Timeout expired.

The timeout period elapsed prior to completion of the operation or the server is not responding.

System.Web.HttpException (0x80004005): Unable to connect to SQL Server session database. ---> System.Data.SqlClient.SqlException (0x80131904): Timeout expired.

The timeout period elapsed prior to completion of the operation or the server is not responding.

About our setup:

Umbraco v4.7.1 (a rebranded umbraco website, originally created in 4.5.2 upgraded to 4.7.0 and 4.7.1)

I'm not sure about the retry policy. I'll have to digg into the code to find out if that is a potential issue. But i'd try to find out if the failed connections are still an issue with SQL Azure. The articles are somewhat old, so it might have been improved in the meantime. But at the same time I can understand why SQL Azure would terminate some connections.

I see Damiaan bet me to it, but how is your db for session management setup? Is it setup in its own db within the same affinity group.

Yes, we've noticed that SQL Azure does behave somewhat differently when it comes to handling/closing connections and throttling.

We do have some custom logic which queries the database, although I doubt if any of it is really hammering the database (will be doing a code walk through tomorrow though, just to make sure connections/transactions are closed properly, and might even throw in the retry policy while at it).

Regarding affinity group: I did not really create one, I've just made sure both hosted service & database are within the same region (Western Europe in this case). Session data is stored within the same database, can this be a problem?

Using the same database could cause the problem if this makes you using "excessive resource usage". In other words using the database too much could make other queries fail.

Quote: "In order to provide a good experience to all SQL Azure Database customers, your connection to the service may be closed due to the following conditions:

Excessive resource usage

Connections that have been idle for 30 minutes or longer

Failover because of server failures

" /Quote

This implies that if you are using the database too much, throttling will be activated on your connections. This is also the reason why you have to implement a retry logic. In case your queries are throttled, you need to retry.

Here you have a very good document on Azure SQL connection management. Maybe paying attention to the returned error codes (and logging them) could help you finding the real cause of the problem.

I really do hope sessions aren't causing any excessive usage as the number of users on our website is still very limited.

On the other hand I do think its a good idea to setup a seperate database for session data as the number of users will definitely grow once the website is launched.

I also don't think the custom queries we wrote are anywhere near resource consuming, with the exception of a worker role which is to synchronize lots of data (but has not yet been enabled on Azure).

Its hard to recognize "excessive resource usage" or timeouts though - what happens if an administrators republishes multiple nodes or the entire content tree for that matter? Will concurrent and/or consecutive queries fail? How long will the throttling be active?

Still quite some things I need to learn about (SQL) Azure..

Will look into the resources you provided, maybe those queries can shed some light. SQL isn't returning much error codes / throttling ID's though.

Using a separate database or using the Azure Cache for session management can be considered as best practice (but not most cost effective).

If the website isn't launched then I will not be the excessive resource utilisation as you mentioned, maybe it's another (connection issue). I think knowing the exact error code why your connection has been dropped, will be more helpful.

But still, implementing a retry logic should be a valid the workaround... If you to digg in to the umbraco DataLayer SqlHelper, you can see that no retry logic is currently implemented (as far as I can see).

> Its hard to recognize "excessive resource usage" or timeouts though

True! But as the guidelines and best practices suggest, add retry logic and investigate the return codes...

> What happens if an administrators republishes multiple nodes or the entire content tree for that matter?

Don't know by heart. Check your SQL Monitor on a local db :-) But I don't think this might make the biggest impact. As told before, it doesn't seem to be a throttling problem.

> How long will the throttling be active?

As far as I know, no details are disclosed. There is no algorithm :-)

> Still quite some things I need to learn about (SQL) Azure ;-)

SQLAzure support is looking into the timeout issues but so far they haven't found any errors related to throttling/connectivity/timeouts in the backend logs.

We redeployed the website to a new hosted service & storage account (now sharing an affinity group) and opted to create a seperate database for session data (we were not able to select an affinity group for the databases though, we only get the option to choose a region).

Although we still have to run some manual tests, so far we did not encounter any timeouts in the new setup. We do notice the back office responds much slower than it does on-premise though :(

We also still haven't found the root cause of the timeouts, which does worry me.

The timeouts also occur on the second deploy I mentioned earlier. Azure support is still looking into the issue and they have reminded us about the retry policy on several occasions. We have sent our code and they recommend we build a retry policy into Umbraco.

Due to a lack of resources and under customer pressure we were forced to deploy the website(s) to alternative hosting. Hopefully this will be a temporary situation as we have 2 equally large Umbraco projects scheduled for deploy on Azure.

I would like to know however if anyone has an idea of how big an effort it would be to develop a retry policy in Umbraco (v4.7) ?

Does the SQLHelper cover all database interaction ?

It turns out that session state was causing the timeouts after all. Once we configured AppFabric cache as session state provider the timeouts no longer occured.

Even though it's possible to maintain session state in SQL Azure (it's also the default in Umbraco accelerator setup) Microsoft does not officially support it!

Any chance I can get you to email (morten at umbraco dot com) me your findings and how you ended up configuring AppFabric? I'd like to make a note of it for an upcoming update of the Accelerator for Umbraco.

I've just found this thread while looking into a similar session state provider issues. Was just wondering how the app-fabric session state provider is working out for you, has this given you had any other issues to deal with? Also, are you running into any limitations (i.e sizes or the 10 concurrent connections on smaller size cache).

Also, to others, has anyone tried the Universal providers for asp.net that do officially support azure. Was thinking of trying these, but I'm wondering if it's just the retry logic that they've added or if this will stop the throttling issues i'm seeing?

We have been using the Appfabric cache for session state in all of our projects for close to 6 months now - and we haven't looked back since.

So far we did not run into any limitations using the 128Mb minimum cache size (for web sites with an average of 25-50k visitors per month) but I guess it also much depends on how often you're actually using session state which is usually very limited in our projects (mainly back-office).

We haven't seen any issues with concurrent connections but I believe these connections are well managed by the default session state provider for Appfabric cache.

So it seems that there are two different things at hand that need clarifying for this very important topic.

One is Windows Server AppFabric Caching ( see http://msdn.microsoft.com/en-us/library/ee790918%28v=azure.10%29.aspx ), and the other is handling transient conditions on SQL Azure. If I’m not mistaken, the AppFabric programming model will take care of the data cached by the application, but it will not change the fact that an SQL query fails to run because the connection was lost. Here lies the question: If a connection is lost, and a query is about to execute, will the AppFabric cache establish the connection once again before it actually runs? This is a very difficult case to replicate, but I believe the answer is no. (a connection was opened successfully, connection is then lost, and then a SQL script runs). Fail, right?

In order for the application to be reliable we must take care of handling transient conditions. For instance, implementing retry policies on all SQL-related methods in order to avoid connectivity issues when using Umbraco with SQL Azure.

Unfortunately still, there is no retry policy implemented in the CMS even though it is a recommended best practice by Microsoft.

For example, check out the source code on Umbraco v4.8 or older.

In the umbraco.presentation project, the umbraco.content.cs method “private XmlDocument LoadContentFromDatabase()” does not have a retry policy, as well as the umbraco.DataLayer.SqlHelper method “public IRecordsReader ExecuteReader(string commandText, params IParameter[] parameters)”.

The retry policy is missing in most, if not all, SQL related methods around the code.

On the upside, implementing such feature might not be such a difficult or time-consuming task for someone that knows their way around the code. To implement this functionality and get Umbraco running with SQL Database (SQL Azure) as Microsoft intends, check out:

This last link also discusses throttling. The CMS, or custom code (packages, etc) for that matter, might be affected by throttling when the code runs consecutive SQL connections. For instance, publishing many nodes in a consecutive fashion. If transient conditions occur, it will cause applications running slower than usual because of the way Azure Fabric works.

On the link, pay attention in particular to:

“SQL Azure comes with throttling behaviour the attributes of which can manifest themselves when a client is establishing connections to a SQL Azure database or running queries against it. The database connections can be throttled internally by the SQL Azure fabric for several reasons, such as excessive resource usage, long-running transactions, and possible failover and load balancing actions, leading to termination of a client session or temporary inability to establish new connections while a transient condition persists.”

I’m not an Azure expert, but I do believe these issues need to be addressed in order to get Umbraco running safely with SQL Azure.

@Anton, Yes and no. In version 6.1.0 of Umbraco we have fault handling in place for the new datalayer. But unfortunately the old datalayer is still used, so until we have gotten rid of all the usages of the legacy datalayer we don't have 100% support of fault handling on sql azure.

Good to hear about the new datalayer fault handling. I'm trying out this currently: Umbraco 6.1, Azure Web Site, standard config (one instance), plus Azure Sql. It was very easy to get up and running, (unzip 6.1, open in WebMatrix, publish to Azure, create a new Azure Sql DB, copy the config setting into Web Site, thats it) and is going very good so far.

@Jonas Are you running on Azure Websites or Azure Virtual Machine? I had issues before with the durability of files etc. on Azure Websites and switched to VMs. In 2013 i'm not sure where we stand on Azure support.

We are running Umbraco 6.0.5 for weeks in Azure websites (no vm, no webrole). Everything is running fine. Transient failures on Azure SQL are very infrequent (less than once / month).

Websites is good but currently it has its problems which might prevent it from being used for some site:

The sites bounce at least once a day, we think this is just the nodes getting restarted.

No Service Level Agreement as its still in preview

Does not support SSL

Can't hook up a custom domain without promoting the website to a shared/reserved instance which sucks for licencing by domain

You can't have multiple instances running the same site, you are stuck to one (lots of reasons for this but I'll savet those for a blog post).

Plus points are you can ram up to a bigger instance in a few seconds and its pretty cheap. The SQL Azure database is separate so if you go up to a VM later it makes life easier. The instances are pretty powerful and can handle some heavy loads so a single site might be perfectly adequate for most sites.

We currently have a 2 server VM setup site running well on Azure and for session state we've started using a RavenDB session state provider (as we needed Raven for part of the shopping cart provider). It seems to be performing fantastically from our tests but we have more to do before go live. Very impressed with the Raven setup, it clears out old sessions for you and replicates data between both servers automatically for redundancy. Mucho impressive.

Sorry to jump onto this post. I currently upgraded to Umbraco 7.2.8 and started seeing this Azure DB issue. I am looking into your solution of installing app fabric but I know for a fact that I don't have any session state stored anywhere.

From your experience do you think it might be something esle causing it?

In our case persisting the session data in SQL Azure resulted in frequent roundtrips and many connections from our application to the database which eventually had SQL Azure timeout at random times and for random requests. Using the distributed cache for session state fixed this as it really reduced the load on the database.

Since you are not using sessions the timeouts may be caused by any part of your application that is heavily interacting with the database even though the timeouts may occur sporadically and within a different context.

The main feedback we got from the SQL Azure support team was to implement a SQL retry policy (i.e. the transient fault handling application block) basically telling us to expect SQL Azure to timeout/throttle more often than an on-premise SQL instance would because of its distributed nature.

We managed to implement the transient fault handling fairly quickly into our custom data transactions and from my understanding this retry policy has now also been implemented in the Umbraco data layer. If you have any custom data transactions in your application I would strongly recommend to implement this strategy as well.

Recently I had a similar issue with a Azure hosted website. They are asking to implement retry logic. but I'm not sure from which version onward Umbraco implemented retry logic so I could upgrade the site.

Thanks for your reply and sorry for picking it up late.

There was a piece of code that was causing the issue it wasn;t anything to do with sessions but still that code was hitting the DB thats what I think. I didnt have time to debug the code so commented it out and added my footer navigation manually rather than generating it dynamically using the node tree. Below is that code that was causing issue and any help on why is doing that would be much appreciated!.

public static List<string> GetFooterSections(int id)

{

// get pre-defined footer sections from umbraco dropdown

var preValues = library.GetPreValues(id);

var footerSections = (from XPathNavigator section in preValues

from XPathNavigator child in section.SelectChildren("preValue", "")

select child.Value).ToList();

return footerSections;

}

The call to GetPreValues will hit the database which you definitely want to avoid in a footer navigation. As to why you are getting a transient error remains unclear; I believe that method uses the SQLHelper which should have the retry policy built in.

Avoiding the database call however could require some rearrangement of the content structure (i.e. avoiding dropdowns as data types for footer content). But in the end hitting the Umbraco cache will save you time and improve performance.

We had similar problem with Umbraco website hosted on Azure (with azure sql) . Almost constant database timeouts were driving me crazy. What helped in our case ( at least for the moment it seems like it helped ), was enabling distributedCall in umbracoSettings.config file.

SQL Azure connectivity issues

Hi,

After having deployed a website to Windows Azure using the Azure accelerator for Umbraco, we are (occasionally) experiencing SQL Azure connection errors & timeouts from within the Umbraco backoffice. We have yet to discover the source of evil, but in the meanwhile I'm wondering if any of you ever experienced/resolved these kind of connection timeouts before? Any tips/tricks on how to trace/profile Umbraco on SQL Azure are welcome as well.

Some of the error messages we find in the Umbraco logs:

About our setup:

SQL Azure support recommends in having a retry policy in place:

http://blogs.msdn.com/b/bartr/archive/2010/06/20/sql-azure-retry-code-update.aspx

http://peterkellner.net/2011/01/21/sqlazure-connection-retry-problem-using-best-practices/

Not sure if Umbraco has one built-in already?

As far as i know, umbraco has no retry policy build in.

You might get timeout's because your websites might be hammering the SQL database.

Possible reasons why umbraco is hammering the Database is publishing a node, or using the Relations API. (or you are doing some custom SQL logic).

Question: are you using also a SQL db for session management?

Hi Lennart,

I'm not sure about the retry policy. I'll have to digg into the code to find out if that is a potential issue. But i'd try to find out if the failed connections are still an issue with SQL Azure. The articles are somewhat old, so it might have been improved in the meantime. But at the same time I can understand why SQL Azure would terminate some connections.

I see Damiaan bet me to it, but how is your db for session management setup? Is it setup in its own db within the same affinity group.

- Morten

Hi Damiaan, Morten,

Thanks for your replies.

Yes, we've noticed that SQL Azure does behave somewhat differently when it comes to handling/closing connections and throttling.

We do have some custom logic which queries the database, although I doubt if any of it is really hammering the database (will be doing a code walk through tomorrow though, just to make sure connections/transactions are closed properly, and might even throw in the retry policy while at it).

Regarding affinity group: I did not really create one, I've just made sure both hosted service & database are within the same region (Western Europe in this case). Session data is stored within the same database, can this be a problem?

Grtz

L

Hi

Using the same database could cause the problem if this makes you using "excessive resource usage". In other words using the database too much could make other queries fail.

SQL Azure has a limit on the resources as stated in the "General Guidelines and Limitations SQL Azure - Connection Constraints".

Quote: "In order to provide a good experience to all SQL Azure Database customers, your connection to the service may be closed due to the following conditions:

" /Quote

This implies that if you are using the database too much, throttling will be activated on your connections. This is also the reason why you have to implement a retry logic. In case your queries are throttled, you need to retry.

Here you have a very good document on Azure SQL connection management. Maybe paying attention to the returned error codes (and logging them) could help you finding the real cause of the problem.

Thanks Damiaan,

I really do hope sessions aren't causing any excessive usage as the number of users on our website is still very limited.

On the other hand I do think its a good idea to setup a seperate database for session data as the number of users will definitely grow once the website is launched.

I also don't think the custom queries we wrote are anywhere near resource consuming, with the exception of a worker role which is to synchronize lots of data (but has not yet been enabled on Azure).

Its hard to recognize "excessive resource usage" or timeouts though - what happens if an administrators republishes multiple nodes or the entire content tree for that matter? Will concurrent and/or consecutive queries fail? How long will the throttling be active?

Still quite some things I need to learn about (SQL) Azure..

Will look into the resources you provided, maybe those queries can shed some light. SQL isn't returning much error codes / throttling ID's though.

Using a separate database or using the Azure Cache for session management can be considered as best practice (but not most cost effective).

If the website isn't launched then I will not be the excessive resource utilisation as you mentioned, maybe it's another (connection issue). I think knowing the exact error code why your connection has been dropped, will be more helpful.

But still, implementing a retry logic should be a valid the workaround... If you to digg in to the umbraco DataLayer SqlHelper, you can see that no retry logic is currently implemented (as far as I can see).

> Its hard to recognize "excessive resource usage" or timeouts though

True! But as the guidelines and best practices suggest, add retry logic and investigate the return codes...

> What happens if an administrators republishes multiple nodes or the entire content tree for that matter?

Don't know by heart. Check your SQL Monitor on a local db :-) But I don't think this might make the biggest impact. As told before, it doesn't seem to be a throttling problem.

> How long will the throttling be active?

As far as I know, no details are disclosed. There is no algorithm :-)

> Still quite some things I need to learn about (SQL) Azure ;-)

Me too :-)

Cheers

Here's a quick status update:

SQLAzure support is looking into the timeout issues but so far they haven't found any errors related to throttling/connectivity/timeouts in the backend logs.

We have reviewed our custom code and did not find any irregulartities concerning opening/closing of connections, and we added a retry policy as recommended (http://msdn.microsoft.com/en-us/library/hh680934(v=PandP.50).aspx).

We redeployed the website to a new hosted service & storage account (now sharing an affinity group) and opted to create a seperate database for session data (we were not able to select an affinity group for the databases though, we only get the option to choose a region).

Although we still have to run some manual tests, so far we did not encounter any timeouts in the new setup. We do notice the back office responds much slower than it does on-premise though :(

We also still haven't found the root cause of the timeouts, which does worry me.

Here's another update:

The timeouts also occur on the second deploy I mentioned earlier. Azure support is still looking into the issue and they have reminded us about the retry policy on several occasions. We have sent our code and they recommend we build a retry policy into Umbraco.

Due to a lack of resources and under customer pressure we were forced to deploy the website(s) to alternative hosting. Hopefully this will be a temporary situation as we have 2 equally large Umbraco projects scheduled for deploy on Azure.

I would like to know however if anyone has an idea of how big an effort it would be to develop a retry policy in Umbraco (v4.7) ?

Does the SQLHelper cover all database interaction ?

Grtz

L

It turns out that session state was causing the timeouts after all. Once we configured AppFabric cache as session state provider the timeouts no longer occured.

Even though it's possible to maintain session state in SQL Azure (it's also the default in Umbraco accelerator setup) Microsoft does not officially support it!

Hi Lennart,

Any chance I can get you to email (morten at umbraco dot com) me your findings and how you ended up configuring AppFabric? I'd like to make a note of it for an upcoming update of the Accelerator for Umbraco.

- Morten

Sure Morten, I'll send you the info asap.

Hi Lennart,

Glad you found the issue. Now I understand why I had similar problems!

Kind regards

Can you post the code here? I think I'm having similar issues. I'm just trying to install one of the starter kits, and it always times out.

-C

Hi Chad,

First you will need to download and install the Azure AppFabric SDK (currently v1.5): http://www.microsoft.com/download/en/details.aspx?id=27421

Next you should add the following DLL references to your project (or make sure they are copied to the Umbraco bin folder):

And finally you can configure the session state provider in the web.config, as well instructed here: http://msdn.microsoft.com/en-us/library/windowsazure/gg278339.aspx

Hope that helps, let me know if you have any other questions.

Grtz

L

Defiinitely helped. Thanks!

Hi Lennart,

I've just found this thread while looking into a similar session state provider issues. Was just wondering how the app-fabric session state provider is working out for you, has this given you had any other issues to deal with? Also, are you running into any limitations (i.e sizes or the 10 concurrent connections on smaller size cache).

Also, to others, has anyone tried the Universal providers for asp.net that do officially support azure. Was thinking of trying these, but I'm wondering if it's just the retry logic that they've added or if this will stop the throttling issues i'm seeing?

Cheers,

Rob

Hi Rob,

We have been using the Appfabric cache for session state in all of our projects for close to 6 months now - and we haven't looked back since.

So far we did not run into any limitations using the 128Mb minimum cache size (for web sites with an average of 25-50k visitors per month) but I guess it also much depends on how often you're actually using session state which is usually very limited in our projects (mainly back-office).

We haven't seen any issues with concurrent connections but I believe these connections are well managed by the default session state provider for Appfabric cache.

Grtz

L

Hey all,

So it seems that there are two different things at hand that need clarifying for this very important topic.

One is Windows Server AppFabric Caching ( see http://msdn.microsoft.com/en-us/library/ee790918%28v=azure.10%29.aspx ), and the other is handling transient conditions on SQL Azure. If I’m not mistaken, the AppFabric programming model will take care of the data cached by the application, but it will not change the fact that an SQL query fails to run because the connection was lost. Here lies the question: If a connection is lost, and a query is about to execute, will the AppFabric cache establish the connection once again before it actually runs? This is a very difficult case to replicate, but I believe the answer is no. (a connection was opened successfully, connection is then lost, and then a SQL script runs). Fail, right?

In order for the application to be reliable we must take care of handling transient conditions. For instance, implementing retry policies on all SQL-related methods in order to avoid connectivity issues when using Umbraco with SQL Azure.

Unfortunately still, there is no retry policy implemented in the CMS even though it is a recommended best practice by Microsoft.

For example, check out the source code on Umbraco v4.8 or older.

In the umbraco.presentation project, the umbraco.content.cs method “private XmlDocument LoadContentFromDatabase()” does not have a retry policy, as well as the umbraco.DataLayer.SqlHelper method “public IRecordsReader ExecuteReader(string commandText, params IParameter[] parameters)”.

The retry policy is missing in most, if not all, SQL related methods around the code.

On the upside, implementing such feature might not be such a difficult or time-consuming task for someone that knows their way around the code. To implement this functionality and get Umbraco running with SQL Database (SQL Azure) as Microsoft intends, check out:

Official Best Practices for Windows Azure:

http://www.windowsazure.com/en-us/manage/windows/best-practices/troubleshooting/

(Check out the part on Design to Handle Errors Gracefully)

Windows Azure Storage Client class RetryPolicies Documentation:

http://msdn.microsoft.com/en-us/library/ee758667

Example of a retry policy:

http://msdn.microsoft.com/en-us/library/hh680927%28v=pandp.50%29.aspx

Explanation on the importance of having a retry policy plus other recommendations handling transient conditions:

http://windowsazurecat.com/2010/10/best-practices-for-handling-transient-conditions-in-sql-azure-client-applications/

This last link also discusses throttling. The CMS, or custom code (packages, etc) for that matter, might be affected by throttling when the code runs consecutive SQL connections. For instance, publishing many nodes in a consecutive fashion. If transient conditions occur, it will cause applications running slower than usual because of the way Azure Fabric works.

On the link, pay attention in particular to:

“SQL Azure comes with throttling behaviour the attributes of which can manifest themselves when a client is establishing connections to a SQL Azure database or running queries against it. The database connections can be throttled internally by the SQL Azure fabric for several reasons, such as excessive resource usage, long-running transactions, and possible failover and load balancing actions, leading to termination of a client session or temporary inability to establish new connections while a transient condition persists.”I’m not an Azure expert, but I do believe these issues need to be addressed in order to get Umbraco running safely with SQL Azure.

Please tell me I’m wrong.

Best,

Adi.

Hi Guys,

Is this still a problem in 2013 and V6+?

@Anton, Yes and no. In version 6.1.0 of Umbraco we have fault handling in place for the new datalayer. But unfortunately the old datalayer is still used, so until we have gotten rid of all the usages of the legacy datalayer we don't have 100% support of fault handling on sql azure.

- Morten

Good to hear about the new datalayer fault handling. I'm trying out this currently: Umbraco 6.1, Azure Web Site, standard config (one instance), plus Azure Sql. It was very easy to get up and running, (unzip 6.1, open in WebMatrix, publish to Azure, create a new Azure Sql DB, copy the config setting into Web Site, thats it) and is going very good so far.

@Jonas Are you running on Azure Websites or Azure Virtual Machine? I had issues before with the durability of files etc. on Azure Websites and switched to VMs. In 2013 i'm not sure where we stand on Azure support.

Im using Websites service, so far only used a few hours, I expect problems ... :) will update with reports if I get them.

Hope others with experience can join the discussion aswell.

We are running Umbraco 6.0.5 for weeks in Azure websites (no vm, no webrole). Everything is running fine. Transient failures on Azure SQL are very infrequent (less than once / month).

Websites is good but currently it has its problems which might prevent it from being used for some site:

Hi Lennart,

Sorry to jump onto this post. I currently upgraded to Umbraco 7.2.8 and started seeing this Azure DB issue. I am looking into your solution of installing app fabric but I know for a fact that I don't have any session state stored anywhere.

From your experience do you think it might be something esle causing it?

Thanks

Hi Bilal,

In our case persisting the session data in SQL Azure resulted in frequent roundtrips and many connections from our application to the database which eventually had SQL Azure timeout at random times and for random requests. Using the distributed cache for session state fixed this as it really reduced the load on the database.

Since you are not using sessions the timeouts may be caused by any part of your application that is heavily interacting with the database even though the timeouts may occur sporadically and within a different context.

The main feedback we got from the SQL Azure support team was to implement a SQL retry policy (i.e. the transient fault handling application block) basically telling us to expect SQL Azure to timeout/throttle more often than an on-premise SQL instance would because of its distributed nature.

We managed to implement the transient fault handling fairly quickly into our custom data transactions and from my understanding this retry policy has now also been implemented in the Umbraco data layer. If you have any custom data transactions in your application I would strongly recommend to implement this strategy as well.

Grtz

L

Hi,

Recently I had a similar issue with a Azure hosted website. They are asking to implement retry logic. but I'm not sure from which version onward Umbraco implemented retry logic so I could upgrade the site.

My Umbraco version is 7.1.8

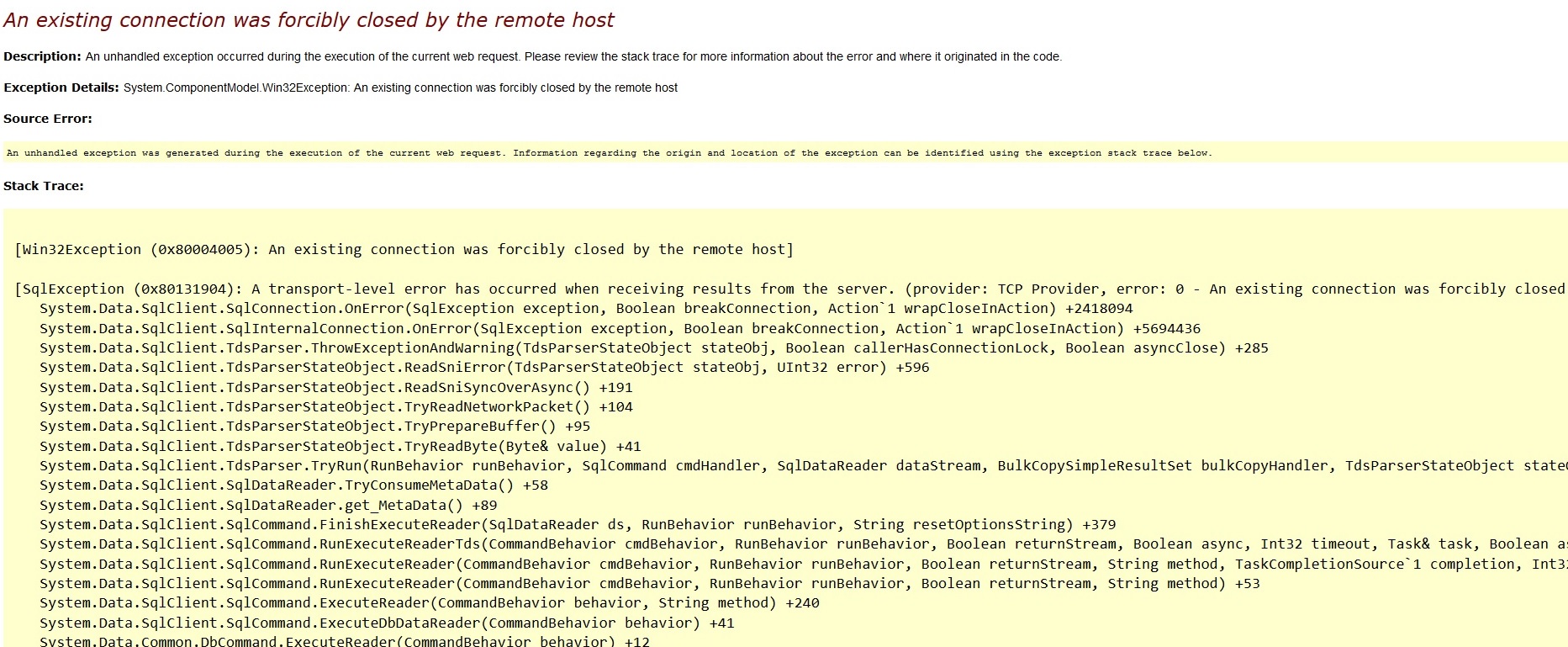

Following is the error message.

Thanks, Madura

I think they added Retry logic around v6 so you should defo have it for v7.

Hi Lennart,

Thanks for your reply and sorry for picking it up late.

There was a piece of code that was causing the issue it wasn;t anything to do with sessions but still that code was hitting the DB thats what I think. I didnt have time to debug the code so commented it out and added my footer navigation manually rather than generating it dynamically using the node tree. Below is that code that was causing issue and any help on why is doing that would be much appreciated!.

Many thanks AD

The call to GetPreValues will hit the database which you definitely want to avoid in a footer navigation. As to why you are getting a transient error remains unclear; I believe that method uses the SQLHelper which should have the retry policy built in.

Avoiding the database call however could require some rearrangement of the content structure (i.e. avoiding dropdowns as data types for footer content). But in the end hitting the Umbraco cache will save you time and improve performance.

We had similar problem with Umbraco website hosted on Azure (with azure sql) . Almost constant database timeouts were driving me crazy. What helped in our case ( at least for the moment it seems like it helped ), was enabling distributedCall in umbracoSettings.config file.

Umbraco 7.3.0, on Azure with load Balancing.

is working on a reply...

This forum is in read-only mode while we transition to the new forum.

You can continue this topic on the new forum by tapping the "Continue discussion" link below.