I'm currently using the builtin Umbraco image processor to generate cropped images.

When I run the site through Google's PageSpeed Insights service, it tells me that many of the images could use lossless compression to reduce their size by over 90%.

Is there any way to make the image processor compress the images when it creates crops?

The default encoder used by System.Drawing doesn't compress well when encoding images. ImageProcessor wraps around System.Drawing so suffers from the same limitations.

However I have released a plugin that uses various tools to post process images that can be installed via Nuget.

Please bear in mind though that this will add a slight overhead to the processing pipeline so if you have a very high traffic website I would recommend against using it.

this will add a slight overhead to the processing pipeline so if you have a very high traffic website I would recommend against using it.

Do the processed images get cached? Also, according to this website page, "This plugin was deprecated in version 4.4.0 with the functionality enhanced and merged into the core." So should this already be working, or does it need to be configured?

It does cache the image. The operation acts on the byte stream before saving.

Ignore the docs page, it needs updating. It was merged but Windows Defender incorrectly reports the png compressor as a virus which is super annoying so I had to pull it back out into a plugin.

Is there anything special you need to do to enable the post processing features? I've installed the post processor, cleared the cache and still seeing less than optimal images.

Funny thing is we're using ImageProcessor to serve product images and PageSpeed Insights has no complaints over them (or it simply doesn't pick up on them, that might also be the case).

Soooo.... Turns out upon investigation, jpeg compression was broken and only the System.Drawing output was being saved..

I have fixed that though and just released a shiny new v1.2.0

This not only fixes the issue but replaces the jpeg compressor with mozjpeg so that the output images are as small as possible. I've also replaced the png compressor with TruePng so that we get the smallest output possible there also.

You'll have to delete your cache to see the benefits but the results should be obvious.

Not sure if I did something wrong but it doesn't seem to be working for me. I updated via Nuget (I had the previous version installed). Do I need to do something else to install the unmanaged code or something?

The plugin definitely works. I spent about 10 hours yesterday running comprehensive tests.

Either...

You are seeing the cached results in your browser. You did Ctrl+F5, yeah?

Your optimization operations are timing out. (5 sec max)

You are lacking permissions somewhere (Which would cause an exception to be thrown/logged).

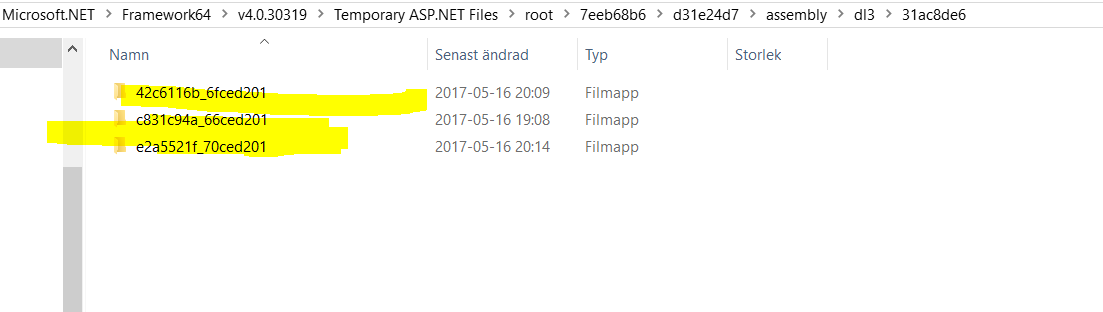

There is nothing required other than installing the package. The resources are bundled within the binary and extracted to your running directory on initialization. You should be able to navigate to your running folder (The dynamically generated one, not what IIS is pointing to) and see them there.

No-one yet has provided information about their setup at all so I can only guess where you are running this.

James

EDIT

I wonder whether a new temporary folder is being created for your applications on rebuild? It should but you never know. Having the same folder could potentially prevent the new resource extraction from taking place.

I'm trying to figure out if there is an issue installing the unmanaged executables (although I don't see anything in the log). You said:

The resources are bundled within the binary and extracted to your running directory on initialization. You should be able to navigate to your running folder (The dynamically generated one, not what IIS is pointing to) and see them there.

Is this referring to the "Temporary ASP.NET Files" for the site? I can't find them on my local computer (where Post Processor works).

I got busy and refactored the PostProcessor to ensure all executables get wiped out on Application Start. I also added a fair whack of logging to the application and the ability to set the timeout for postprocessing images.

    // Set the timeout to 10 seconds. (Default 5)
    PostProcessorApplicationEvents.SetPostProcessingTimeout(10000);

You can get that update (v1.2.1) on Nuget. All my testing indicates that it works well.

Umbraco contains a log implementation for ImageProcessor that should add any logged events to the normal log so if there are issues they should show up.

You can see the source code for this release here; it would be great if someone could review it.

I'm glad someone else reports it working because I still don't see that it is working for me. I didn't see anything in the Umbraco logs (even after setting to DEBUG). I'm running in Umbraco Cloud - not sure how to check the running folder - hopefully it's accessible via Kudu. Unfortunately don't have a lot of time at the moment to spend investigating but will return to it when I can because it is so important.

Thanks James for your support! I'll let you know if I find any issues when I dig deeper.

UPDATE: I did another test - it works locally. Does not seem to work on Umbraco Cloud (I just removed it, restarted the app, deleted the cache folder and my test image loads the same size). I didn't test the same image - but the image that did not get reduced on Cloud does get reduced by 19.8% when I save it to my Mac and run it through ImageOptim, so I presume it should have also gotten smaller with the ImageProcessor PostProcessor.

I just wanted to say that I tested the latest update (v1.2.1) on a few known problem images I've had - which have been uploaded by clients - and saw decent improvements on all.

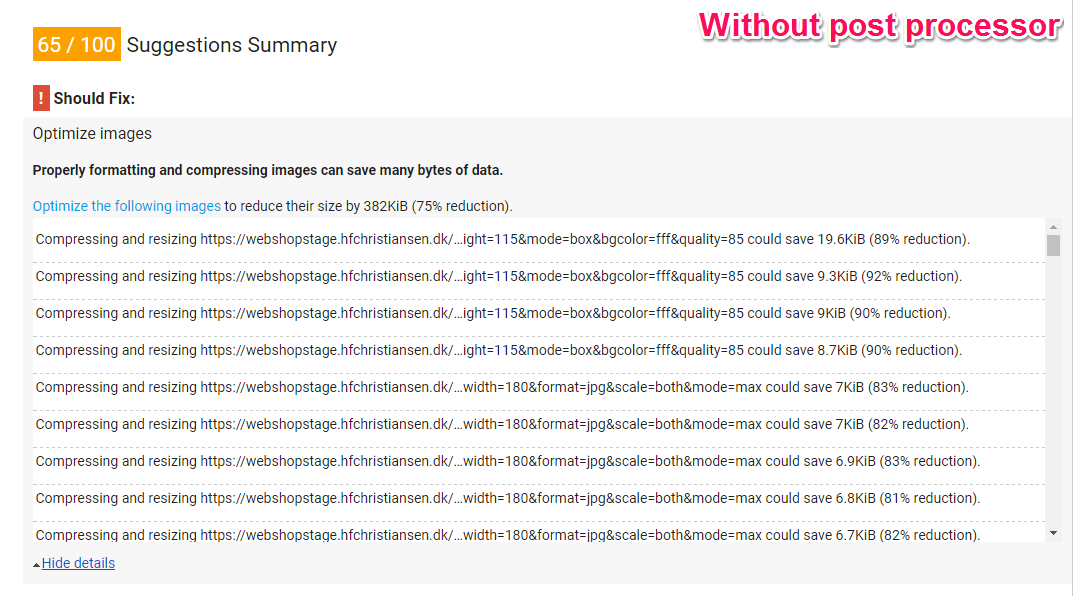

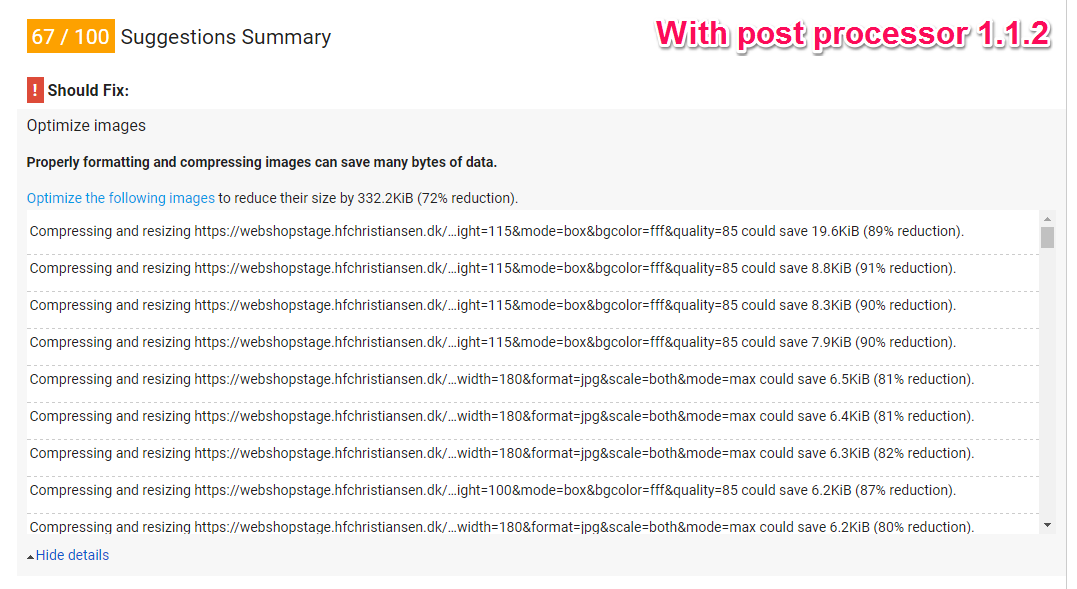

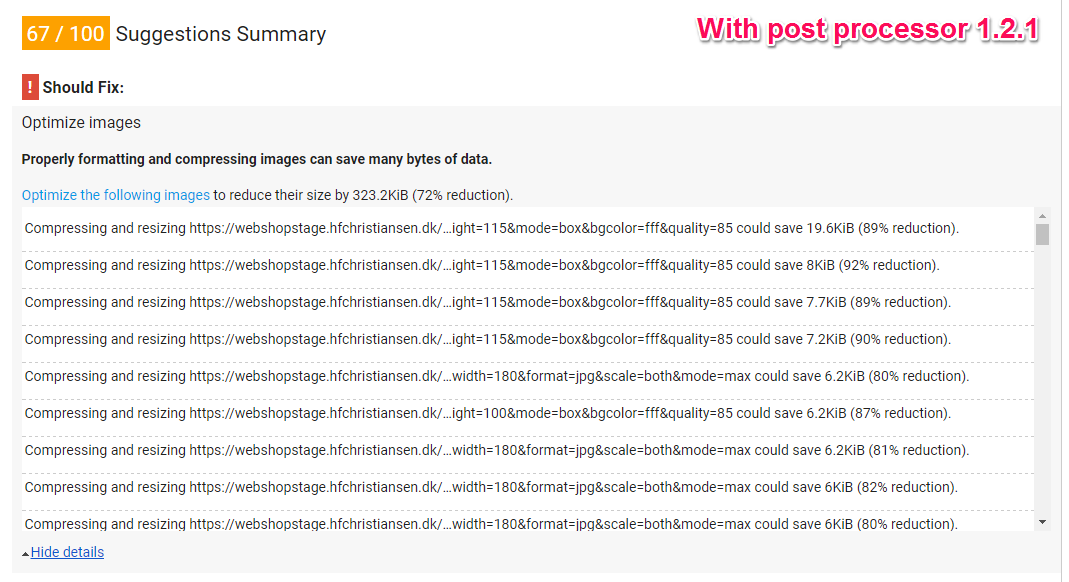

I'm seeing results along the same lines as Alex. The site in question is hosted on Azure. Locally the post processor upgrade from 1.1.2 to 1.2.1 has resulted in a significant improvement. Once deployed it makes very little impact.

We've just run a bunch of tests on the test environment, clearing the cache and restarting the app between each test run. There is a small improvement from 1.1.2 to 1.2.1, but Google still claims the images can be reduced by 80-90%.

Is around the 22 KB mark. However if you switch its output to JPG (you don't need the transparency attributes of PNG, because you're purposely setting a background colour yourself):

Hey Christopher. Thanks for the input. I should've mentioned that we already tried that :) surprisingly the overall result was a slight increase in the total image size, although that particular image did see quite the improvement.

I checked the logs after my previous post and there was nothing of interest. I'll try adding your code when I'm able. Not sure I'll make it this weekend but as soon as I can, I will.

Alright I added the logging code. Unlike last time I checked the logs, this time actually contains entries from ImageProcessor, so something got wired up.

Unfortunately there are no log entries that offer any real clues. The only log entries are errors that certain source image files for product thumbs couldn't be found in the blob storage - e.g.:

There was a change in the default Umbraco behavior from 7.5+ (One I strongly disagree with; they could have simply changed their individual settings) that enforces the preservation of metadata within images for everyone.

The post processor has to respect that so any metadata is always preserved. PageSpeed doesn't care.

I would check to see if you all have preserveExifMetaData="true" in your processing.config files.
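As a rough illustration of that setting, a processing.config with metadata preservation switched off might look like the fragment below. Only the preserveExifMetaData attribute comes from this thread; the file location is an assumption (next to the cache.config mentioned elsewhere in this thread), and everything else in your existing file should stay as it is.

```xml
<!-- Config/imageprocessor/processing.config (assumed location in an Umbraco 7 site) -->
<!-- Sketch only: keep any other attributes and child sections your file already has. -->
<processing preserveExifMetaData="false">
  <!-- existing presets and plugins stay unchanged -->
</processing>
```

Images that are already cached were written with their metadata intact, so the cache needs clearing before any difference shows up.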

For png files, if you look closely at PageSpeed results it actually tells you that lossy compression will save values. That is true but you have to be very careful with lossy compression as it will degrade the output of the image. That's why I only allow images that can be lossy optimized and maintain 90% image quality when compared to the original.

Well preserveExifMetaData was set to true as you suspected. Setting it to false shaves about 50k off the total image load. But it doesn't stop PageSpeed from complaining. It still claims to be able to optimize the images with around 80% on average.

For example this image: https://webshopstage.hfchristiansen.dk/media/4284/limarlogoflathorizontalpantone.jpg?width=200&height=115&mode=box&bgcolor=fff&quality=85 - apparently PageSpeed thinks it can shave 77% off the size.

I'm curious though... I don't see any progressive loading - is that something I should explicitly enable?

I think this might be PageSpeed being a little useless; if you check the "Mobile" tab you'll see that no images are picked up to be optimised, whereas in "Desktop" it thinks they can be. I've seen similar happen where changes I've made (non-image related) have only shown in one scenario - it's flipping frustrating!

To sanity check this, if you save a local copy of your image:

You'll see they come back with minimal to no optimisations available.

I'm not saying this is the case for all of your images (I made that mistaken assumption in a previous comment!) however I would say don't always believe what PageSpeed is telling you... unfortunately I've not found a way to "clear" the PageSpeed cache when one of the "Mobile/Desktop" tabs gets "stuck" with old data/results.

edit: the editor doesn't like the image url, needed to manually escape the underscores with a backslash

I just checked the image with the repaired url (Thanks Christopher!) using the PageSpeed insights for chrome extension and it reports no issues. It's definitely working.

Regarding progressive: only JPEGs bigger than 10 KB are saved using the progressive standard (that one is 4.3 KB). This is because, below that threshold, a baseline image has roughly a 75% chance of being smaller than its progressive equivalent.

PageSpeed is a useful guide, but also frustrating due to its inconsistencies.

Website for the company I work at, Agency97 Limited, shows 96/100 when running PageSpeed through Chrome (using the browser extension).

However on the web version, desktop is 94/100 whereas mobile is 64/100. Digging deeper into the noted issues, you'll see that mobile says the loading of "https://fonts.googleapis.com/css?family=Vollkorn:400" should be optimised... it is actually asynchronously loaded, and desktop correctly picks this up, but mobile doesn't for some reason.

Annoyingly the same code/loading technique is used on a client's site, and PageSpeed correctly picks it up, resulting in 94/100 on desktop and 84/100 on mobile.

No matter what I do (in code or on the server with last modified/caching etc.) I can't get PageSpeed to clear the low score on mobile for my company's website.

Slightly off-topic rant about there!

On the plus side, the current latest v1.2.1 from James has made improvements across the board for imagery on our sites, so this thread has been beneficial as it seems like a positive upgrade for anyone using it!

    // Delete any previous instances to make sure we copy over the new files.
    try
    {
        if (Directory.Exists(path))
        {
            Directory.Delete(path);
        }

        Directory.CreateDirectory(path);
    }

The Directory.Delete(path) call does not pass the recursive parameter, so the code blows up saying that the folder contains files.
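A minimal sketch of the kind of change that points to, assuming the fix is simply to pass true for the recursive argument of Directory.Delete. The class and method names are made up for illustration and are not the plugin's actual code.

```csharp
using System.IO;

// Hypothetical helper, not part of ImageProcessor: it mirrors the quoted
// snippet but asks Directory.Delete to remove the folder's contents too.
public static class PostProcessorFolderSketch
{
    public static void ResetDirectory(string path)
    {
        if (Directory.Exists(path))
        {
            // The second argument (recursive: true) deletes files and
            // subfolders as well. Without it, Directory.Delete throws an
            // IOException when the folder is not empty.
            Directory.Delete(path, true);
        }

        // Recreate an empty folder ready for the freshly extracted files.
        Directory.CreateDirectory(path);
    }
}
```

Calling PostProcessorFolderSketch.ResetDirectory on a folder that still holds extracted executables should leave an empty folder behind instead of throwing.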

This is great news! I've tried it out on one site and it works! Thanks Markus for figuring this out! and James for maintaining this package and being so quick to release the fix!

I've seen significant improvements on the images I've inspected that are processed by image processor!

Hey James. I want to install this on a website I'm launching in a week or so.

The site uses your UmbracoFileSystemProviders.Azure package (Which is awesome!)... Will this be a problem? Using post processing in conjunction with the azure file system provider?

If not, the site already has a f**k ton of images which are already cached and run through imageprocessor? How can I trigger them to be recreated and run through the processor compression. Just delete everything in the cache folder?

it works fine with UmbracoFileSystemProviders.Azure

I think you will need to delete the cache to run them through the optimizers (this can make the site slow at first, so it would be good to run through the site to make sure the images get re-cached before launching).

I am having a similar issue where GTMetrix is saying some images can be compressed by up to 95% (and I confirm it can using http://kraken.io)

In fact changing the quality does almost nothing (the 0 quality one looks all grey artifacts and should be 2 Bytes yet the size doesn't change)

Here's the width 300 quality 100 crop:

This forum has image processor so you can apply the quality=0 querystring to this image and see the same results here. (big file low quality)

does PostProcessor only work for images that are generated by image cropper? I'd like any image on my website to be compressed, even if they are not cropped at all.

Also, shouldn't it be a built-in feature of Umbraco to compress all images that are being rendered? Configurable ideally.

Where can I find the logs of PostProcessor? I can't find anything in the \App_Data\Logs files. Do I have to enable logging for PostProcessor? I really miss some documentation about this plugin...

The PostProcessor plugin processes any image requests captured by the ImageProcessor.Web HttpModule. That means by default any image that contains a command, for example width=400

Hi, I've just tried out the PostProcessor and it works great. However, is it possible to somehow update all of the images that have already been uploaded to the media section? I only see a difference on the images after I upload the image again.

I think if you delete the cache it will regenerate the images using the PostProcessor. The cache is often \App_Data\cache but you can check \Config\imageprocessor\cache.config to see where it is.

To optimize images in Umbraco, use ImageProcessor with its PostProcessor plugin for dynamic optimization. Make sure the plugin is up to date and properly configured so that it compresses images effectively. For high-traffic sites, assess the performance impact of the additional processing overhead.

Alternatively, consider pre-compressing images before upload with an online tool such as https://jpegcompressor.com/.

Image processor compression

Hi,

I'm currently using the builtin Umbraco image processor to generate cropped images.

When I run the site through Google's PageSpeed Insights service, it tells me that many of the images could use lossless compression to reduce their size by over 90%.

Is there any way to make the image processor compress the images when it creates crops?

Thanks

Steve

Hi Steve,

Great question. I had a lot of issues with Google's PageSpeed.

Maybe compressing the images before uploading them to Umbraco would help?

Cheers

Hi Steve,

The default encoder used by System.Drawing doesn't compress well when encoding images. ImageProcessor wraps around System.Drawing so suffers from the same limitations.

However I have released a plugin that uses various tools to post process images that can be installed via Nuget.

https://www.nuget.org/packages/ImageProcessor.Web.PostProcessor/

Please bear in mind though that this will add a slight overhead to the processing pipeline so if you have a very high traffic website I would recommend against using it.

Hope that helps

James

Do the processed images get cached? Also, according to this website page, "This plugin was deprecated in version 4.4.0 with the functionality enhanced and merged into the core." So should this already be working, or does it need to be configured?

Hi Alex,

It does cache the image. The operation acts on the byte stream before saving.

Ignore the docs page, it needs updating. It was merged but Windows Defender incorrectly reports the png compressor as a virus which is super annoying so I had to pull it back out into a plugin.

Cheers

James

Thanks for the clarification!

Hey James, is the post processor still applicable for Umbraco 7.5+ installed image processor?

Yeah Trevor... It's signature compatible so should work just fine.

Just following up on this 8 months later :-)

Is there anything special you need to do to enable the post processing features? I've installed the post processor, cleared the cache and still seeing less than optimal images.

Thanks Trevor

Hi Trevor,

No nothing required, just install and it'll tap into existing events.

Can you share a url to an image? I'd like to check a few things.

Cheers

James

Hi James,

First and foremost kudos for the whole ImageProcessor thing, couldn't live without it.

We're also seeing the same issue on Umbraco 7.5.x with the following ImageProcessor NuGet packages running (should be somewhat latest):

PageSpeed Insights complains that a bunch of images from /media should be compress-able by up to 91% - for example these:

Funny thing is we're using ImageProcessor to serve product images and PageSpeed Insights has no complaints over them (or it simply doesn't pick up on them, that might also be the case).

Hi Kenn,

Soooo.... Turns out upon investigation, jpeg compression was broken and only the System.Drawing output was being saved..

I have fixed that though and just released a shiny new v1.2.0

This not only fixes the issue but replaces the jpeg compressor with mozjpeg so that the output images are as small as possible. I've also replaced the png compressor with TruePng so that we get the smallest output possible there also.

You'll have to delete your cache to see the benefits but the results should be obvious.

Cheers

James

Awesome, James! I'll take it for a spin as soon as I can - probably sometime today - and get back to you with the results :)

Excellent. Let me know if you have any issues :)

Great - I had suspected that there was something not right but hadn't had time to investigate. Thanks for fixing it James!

James,

Not sure if I did something wrong but it doesn't seem to be working for me. I updated via Nuget (I had the previous version installed). Do I need to do something else to install the unmanaged code or something?

Thanks,

Alex

Hi Alex/James,

I also tried the new version but didn't think it was showing any different results (cleared cache etc).

Cheers, Trevor

The plugin definitely works. I spent about 10 hours yesterday running comprehensive tests.

Either...

There is nothing required other than installing the package. The resources are bundled within the binary and extracted to your running directory on initialization. You should be able to navigate to your running folder (The dynamically generated one, not what IIS is pointing to) and see them there.

No-one yet has provided information about their setup at all so I can only guess where you are running this.

James

EDIT

I wonder whether a new temporary folder is being created for your applications on rebuild? It should but you never know. Having the same folder could potentially prevent the new resource extraction from taking place.

James,

I'm trying to figure out if there is an issue installing the unmanaged executables (although I don't see anything in the log). You said:

Is this referring to the "Temporary ASP.NET Files" for the site? I can't find them on my local computer (where Post Processor works).

Alex

Ok Everyone....

I got busy and refactored the PostProcessor to ensure all executables get wiped out on Application Start. I also added a fair whack of logging to the application and the ability to set the timeout for postprocessing images.

You can get that update (v1.2.1) on Nuget. All my testing indicates that it works well.

Umbraco contains a log implementation for ImageProcessor that should add any logged events to the normal log so if there are issues they should show up.

You can see the source code for this release here; it would be great if someone could review it.

Let me know how you get on.

Cheers

James :)

I'm glad someone else reports it working because I still don't see that it is working for me. I didn't see anything in the Umbraco logs (even after setting to DEBUG). I'm running in Umbraco Cloud - not sure how to check the running folder - hopefully it's accessible via Kudu. Unfortunately don't have a lot of time at the moment to spend investigating but will return to it when I can because it is so important.

Thanks James for your support! I'll let you know if I find any issues when I dig deeper.

UPDATE: I did another test - it works locally. Does not seem to work on Umbraco Cloud (I just removed it, restarted the app, deleted the cache folder and my test image loads the same size). I didn't test the same image - but the image that did not get reduced on Cloud does get reduced by 19.8% when I save it to my Mac and run it through ImageOptim, so I presume it should have also gotten smaller with the ImageProcessor PostProcessor.

Are there still no logs within the cloud setup? There should be heavy logging taking place if anything times out or the installation did not work.

I just wanted to say that I tested the latest update (v1.2.1) on a few known problem images I've had - which have been uploaded by clients - and saw decent improvements on all.

I've now deployed it to one of our clients' sites and am no longer seeing any image related notes on Google PageSpeed or GTmetrix.

Thanks for the continued work and improvements on this James!

Yussssssssssssssssssssssssssssssssssssss!!!!

Thanks for testing Christopher. Looks like I've cracked it then.

Cheers

James

I'm seeing results along the same lines as Alex. The site in question is hosted on Azure. Locally the post processor upgrade from 1.1.2 to 1.2.1 has resulted in a significant improvement. Once deployed it makes very little impact.

We've just run a bunch of tests on the test environment, clearing the cache and restarting the app between each test run. There is a small improvement from 1.1.2 to 1.2.1, but Google still claims the images can be reduced by 80-90%.

Here are some screens:

Not sure I can add anything to the local/deploy issue as so far all of mine have been on dedicated boxes, not cloud.

However for what it's worth, if you switch the format of a lot of those images to JPG you'll get much better output.

This image for example:

https://webshopstage.hfchristiansen.dk/media/4295/styrmeyarcher.png?mode=crop&width=200&height=115&mode=box&bgcolor=fff&quality=85

Is around the 22 KB mark. However if you switch its output to JPG (you don't need the transparency attributes of PNG, because you're purposely setting a background colour yourself):

https://webshopstage.hfchristiansen.dk/media/4295/styrmeyarcher.png?mode=crop&width=200&height=115&mode=box&bgcolor=fff&quality=85&format=jpg

That knocks it down to around 6 KB.

Side-by-side (on that image at least) there is negligible quality loss, certainly not in comparison to the file size savings.
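If you build image URLs in code, the same switch could be applied with a small helper like the sketch below. The format=jpg querystring command is the one used in the URL above; the class and method names are hypothetical, and the "already has a format" check is a plain substring test that is good enough for illustration only.

```csharp
using System;

// Hypothetical helper for illustration: appends format=jpg to an
// ImageProcessor URL unless a format command is already present.
public static class ImageUrlSketch
{
    public static string WithJpgFormat(string url)
    {
        // Leave the URL alone if the caller already picked a format.
        if (url.IndexOf("format=", StringComparison.OrdinalIgnoreCase) >= 0)
        {
            return url;
        }

        // Use "&" when a querystring already exists, otherwise start one.
        string separator = url.Contains("?") ? "&" : "?";
        return url + separator + "format=jpg";
    }
}
```

For example, WithJpgFormat("/media/1/a.png?width=200") returns "/media/1/a.png?width=200&format=jpg". This only makes sense for images that do not need transparency, as noted above.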

Hey Christopher. Thanks for the input. I should've mentioned that we already tried that :) surprisingly the overall result was a slight increase in the total image size, although that particular image did see quite the improvement.

Any logging?

The Umbraco logger should be attached automatically but maybe something in Azure is not allowing it.

Could someone try adding this code to their solution?

Cheers

James

Hey James,

I checked the logs after my previous post and there was nothing of interest. I'll try adding your code when I'm able. Not sure I'll make it this weekend but as soon as I can, I will.

Thanks!

Alright I added the logging code. Unlike last time I checked the logs, this time actually contains entries from ImageProcessor, so something got wired up.

Unfortunately there are no log entries that offer any real clues. The only log entries are errors that certain source image files for product thumbs couldn't be found in the blob storage - e.g.:

Looks like PostProcessor isn't logging anything.

I hope this sparks some kind of ideas on your end?

Thanks for wiring it up. Looks like I'm gonna have to set up an Azure instance to see what is going on.

So I updated my blog to use the latest of everything and here's what I found. It's hosted on Azure.

This jpeg image here is definitely getting post-processed as it's progressive.

http://jamessouth.me/media/1001/me.jpg?mode=max&rnd=131386283760000000&width=334

PageSpeed says I can save data though, why?

The answer is metadata... EXIF, XMP etc.

There was a change in the default Umbraco behavior from 7.5+ (One I strongly disagree with; they could have simply changed their individual settings) that enforces the preservation of metadata within images for everyone.

http://issues.umbraco.org/issue/U4-9333

The post processor has to respect that so any metadata is always preserved. PageSpeed doesn't care.

I would check to see if you all have preserveExifMetaData="true" in your processing.config files.

For png files, if you look closely at PageSpeed results it actually tells you that lossy compression will save values. That is true but you have to be very careful with lossy compression as it will degrade the output of the image. That's why I only allow images that can be lossy optimized and maintain 90% image quality when compared to the original.

Hey James

Thank you for all your efforts. I'll try applying your findings to my site as soon as I can and I'll get back to you with the results.

Best of luck, let me know how you get on.

Well, preserveExifMetaData was set to true as you suspected. Setting it to false shaves about 50k off the total image load. But it doesn't stop PageSpeed from complaining. It still claims to be able to optimize the images with around 80% on average.

For example this image: https://webshopstage.hfchristiansen.dk/media/4284/limarlogoflathorizontalpantone.jpg?width=200&height=115&mode=box&bgcolor=fff&quality=85 - apparently PageSpeed thinks it can shave 77% off the size.

I'm curious though... I don't see any progressive loading - is that something I should explicitly enable?

I think this might be PageSpeed being a little useless; if you check the "Mobile" tab you'll see that no images are picked up to be optimised, whereas in "Desktop" it thinks they can be. I've seen similar happen where changes I've made (non-image related) have only shown in one scenario - it's flipping frustrating!

To sanity check this, if you save a local copy of your image:

https://webshopstage.hfchristiansen.dk/media/4284/limar_logo_flat_horizontal_pantone.jpg?mode=crop&width=200&height=115&mode=box&bgcolor=fff&quality=85

(right-click, save as)

Now run it manually through some online optimisation tools, i.e.

You'll see they come back with minimal to no optimisations available.

I'm not saying this is the case for all of your images (I made that mistaken assumption in a previous comment!) however I would say don't always believe what PageSpeed is telling you... unfortunately I've not found a way to "clear" the PageSpeed cache when one of the "Mobile/Desktop" tabs gets "stuck" with old data/results.

edit: the editor doesn't like the image url, needed to manually escape the underscores with a backslash

I just checked the image with the repaired url (Thanks Christopher!) using the PageSpeed insights for chrome extension and it reports no issues. It's definitely working.

Regarding progressive: only JPEGs bigger than 10 KB are saved using the progressive standard (that one is 4.3 KB). This is because, below that threshold, a baseline image has roughly a 75% chance of being smaller than its progressive equivalent.

See https://yuiblog.com/blog/2008/12/05/imageopt-4/ for more information on that.
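The rule described above boils down to a single size check. The sketch below is only an illustration of that rule, not the plugin's code: the names are hypothetical, "10Kb" is assumed to mean 10 * 1024 bytes, and it is an assumption that the check is made against the image's byte length.

```csharp
// Hypothetical illustration of the "progressive only above 10 KB" rule.
public static class JpegEncodingSketch
{
    // Assumption: the 10Kb threshold is read as 10 * 1024 bytes.
    public const long ProgressiveThresholdBytes = 10 * 1024;

    public static bool ShouldUseProgressive(long imageLengthInBytes)
    {
        // At or below the threshold, baseline encoding is more likely to be
        // smaller, so progressive is only chosen for larger images.
        return imageLengthInBytes > ProgressiveThresholdBytes;
    }
}
```

Under these assumptions an image of roughly 4.3 KB, like the one discussed above, stays baseline, which would explain why no progressive loading is visible for it.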

Thank you guys. I'm puzzled that PageSpeed isn't that dependable... one would think Google uses it for scoring pages.

Anywhichway I'm sorry to have taken up so much time. I hope this thread will help others down the road.

Thanks again!

PageSpeed is a useful guide, but also frustrating due to its inconsistencies.

Website for the company I work at, Agency97 Limited, shows 96/100 when running PageSpeed through Chrome (using the browser extension).

However on the web version, desktop is 94/100 whereas mobile is 64/100. Digging deeper into the noted issues, you'll see that mobile says the loading of "https://fonts.googleapis.com/css?family=Vollkorn:400" should be optimised... it is actually asynchronously loaded, and desktop correctly picks this up, but mobile doesn't for some reason.

Annoyingly the same code/loading technique is used on a client's site, and PageSpeed correctly picks it up, resulting in 94/100 on desktop and 84/100 on mobile.

No matter what I do (in code or on the server with last modified/caching etc.) I can't get PageSpeed to clear the low score on mobile for my company's website.

Slightly off-topic rant about there!

On the plus side, the current latest v1.2.1 from James has made improvements across the board for imagery on our sites, so this thread has been beneficial as it seems like a positive upgrade for anyone using it!

When I ran the plugin it worked once or twice, then this row started to return false:

if (!PostProcessorBootstrapper.Instance.IsInstalled)

I can't really figure out why...? =/

Seems like there are multiple "versions" installed in the temp-directory of windows.

All these folders contain the same files: the DLL, an ini file and a folder called "imageprocessor.postprocessor1.2.1.0" with all the exe files in it.

It's really strange that it works once/twice but not again?

I think I got it.

The Directory.Delete(path) call does not pass the recursive parameter, so the code blows up saying that the folder contains files.

I've changed the row to

And now it seems to work on every request.

EDIT: PR: https://github.com/JimBobSquarePants/ImageProcessor/pull/591

Good work!

I've released v1.2.2 with your fix. On Nuget now :)

This is great news! I've tried it out on one site and it works! Thanks Markus for figuring this out! and James for maintaining this package and being so quick to release the fix!

I've seen significant improvements on the images I've inspected that are processed by image processor!

Hey James. I want to install this on a website I'm launching in a week or so.

The site uses your UmbracoFileSystemProviders.Azure package (Which is awesome!)... Will this be a problem? Using post processing in conjunction with the azure file system provider?

If not, the site already has a f**k ton of images which are already cached and run through imageprocessor? How can I trigger them to be recreated and run through the processor compression. Just delete everything in the cache folder?

In my experience:

Also, if you haven't done this yet, I believe it's recommended to configure it so the images are stored outside of the web root (see https://github.com/JimBobSquarePants/ImageProcessor/issues/518 for details).

Hi Lee,

Alex has covered everything correctly (h5yr!). Any issues though just give me a shout.

Cheers

James

Have you run the cache outside the web root? I'm dubious about permissions doing this and whether I'll have issues.

Yes - but just for two recent sites which are on Umbraco Cloud. Seems to work fine there (after we fixed a bug).

I am having a similar issue where GTMetrix is saying some images can be compressed by up to 95% (and I confirm it can using http://kraken.io) In fact changing the quality does almost nothing (the 0 quality one looks all grey artifacts and should be 2 Bytes yet the size doesn't change)

Here's the width 300 quality 100 crop:

This forum has image processor so you can apply the quality=0 querystring to this image and see the same results here. (big file low quality)

I have these packages:

and this log4net.config

In my global.asax.cs I have:

I searched for ImageProc in my App_Data/Logs/* and didn't find anything.

I'm working locally (not in Azure)

Any suggestions what I can try next?

Hi,

does PostProcessor only work for images that are generated by image cropper? I'd like any image on my website to be compressed, even if they are not cropped at all.

Daniel

Hi guys,

Does anyone have any information on this?

Also, shouldn't it be a built-in feature of Umbraco to compress all images that are being rendered? Configurable ideally.

Where can I find the logs of PostProcessor? I can't find anything in the \App_Data\Logs files. Do I have to enable logging for PostProcessor? I really miss some documentation about this plugin...

I'd be glad to get some help :)

Daniel

The PostProcessor plugin processes any image requests captured by the ImageProcessor.Web HttpModule. That means by default any image that contains a command, for example width=400.

http://imageprocessor.org/imageprocessor-web/plugins/postprocessor/

It's not a built in feature because:

Hi, I've just tried out the PostProcessor and it works great. However, is it possible to somehow update all of the images that have already been uploaded to the media section? I only see a difference on the images after I upload the image again.

Best regards

I think if you delete the cache it will regenerate the images using the PostProcessor. The cache is often \App_Data\cache but you can check \Config\imageprocessor\cache.config to see where it is.

Thanks Alex, I will try that and come back with an update.

Awesome, it works. Have a nice day!

To optimize images in Umbraco, use ImageProcessor with its PostProcessor plugin for dynamic optimization. Make sure the plugin is up to date and properly configured so that it compresses images effectively. For high-traffic sites, assess the performance impact of the additional processing overhead. Alternatively, consider pre-compressing images before upload with an online tool such as https://jpegcompressor.com/.
